E-commerce User Behavior Analysis and Visualization Based on MapReduce (Flume, HBase)

舟率率 · 12/23/2025 · Tags: springboot, mapreduce, hbase, flume, java

# Project Overview

flume_hbase

# Dataset

Taobao user behavior data from Alibaba Tianchi

# Development Environment

CentOS 7

# Software Versions

Python 3.8.18, Hadoop 3.2.0, JDK 8, HBase 2.2.7, Flume 1.6.0 (the steps below also use ZooKeeper 3.6.4)

# Development Languages

Python, Java, Shell, SQL

# Development Workflow

Data upload (Flume) -> data preprocessing (MapReduce) -> data analysis (MapReduce) -> data extraction (Java) -> data storage (HBase) -> visualization (Spring Boot)

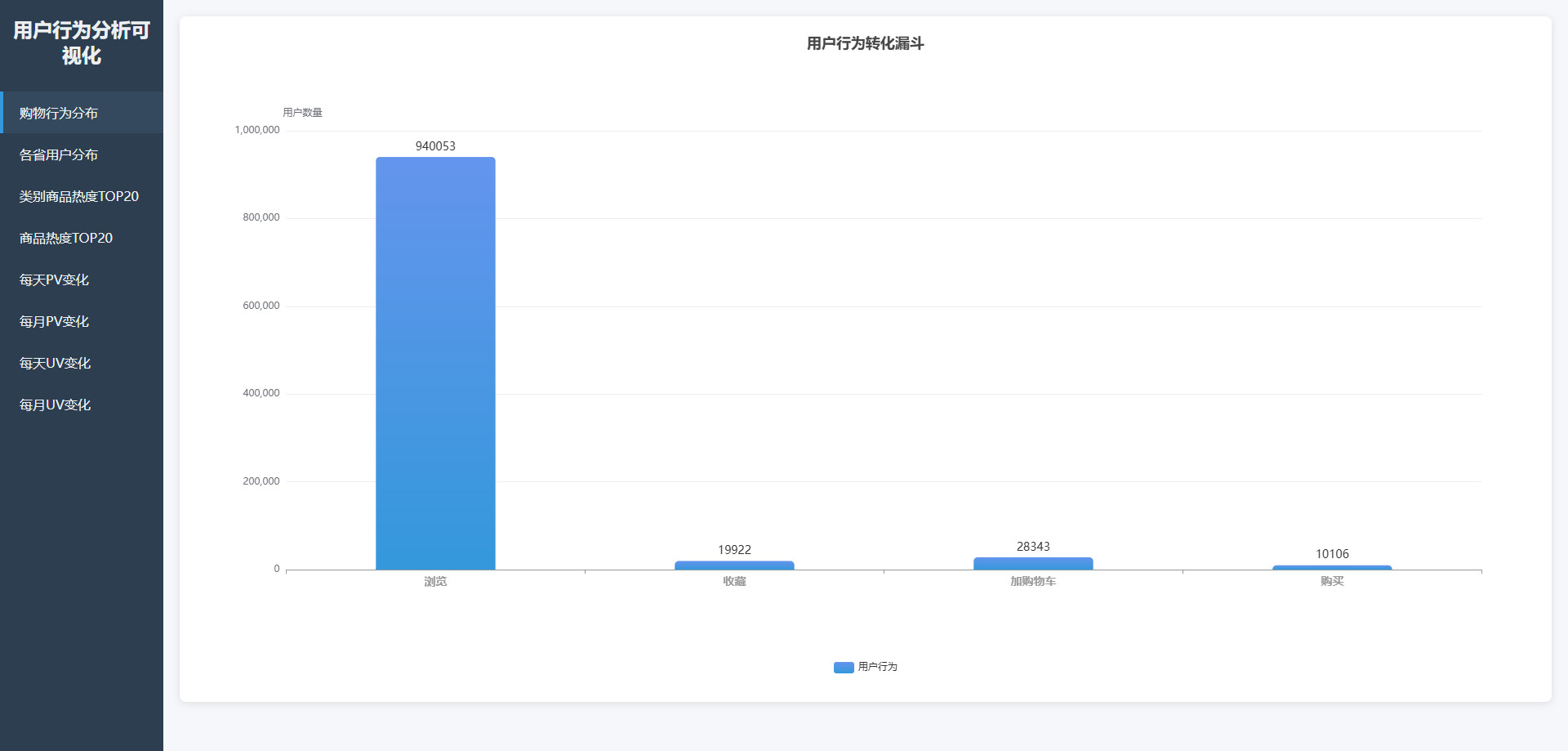

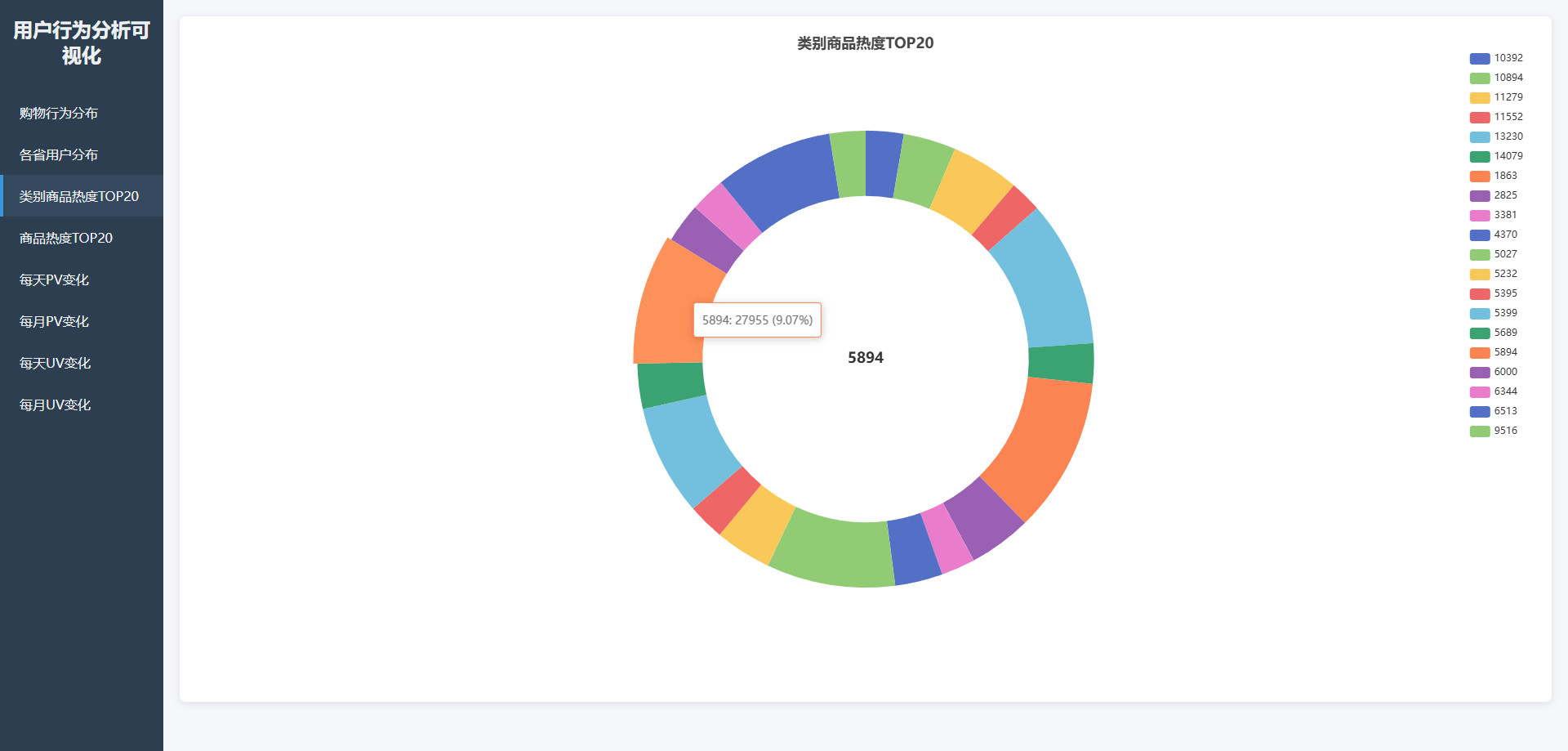

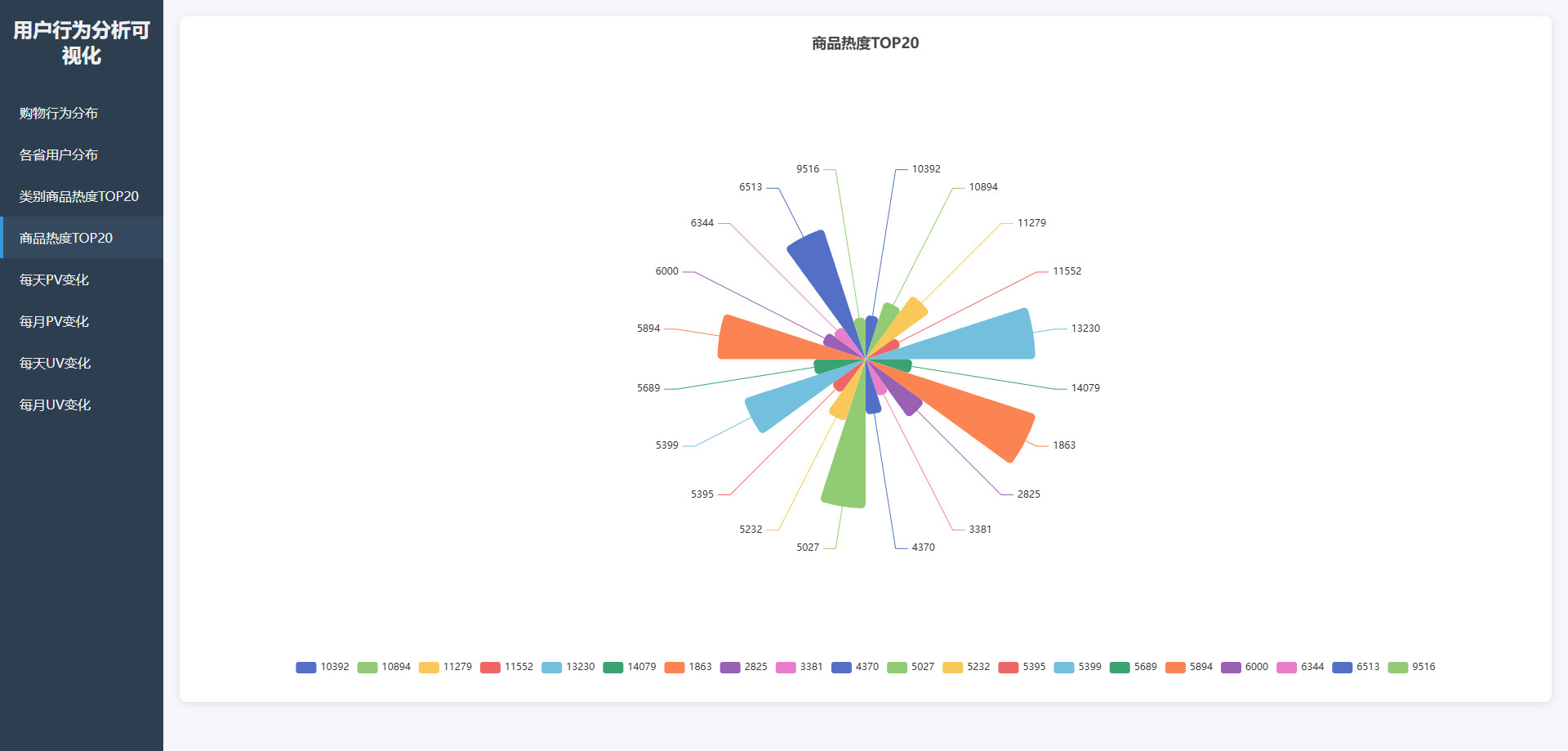

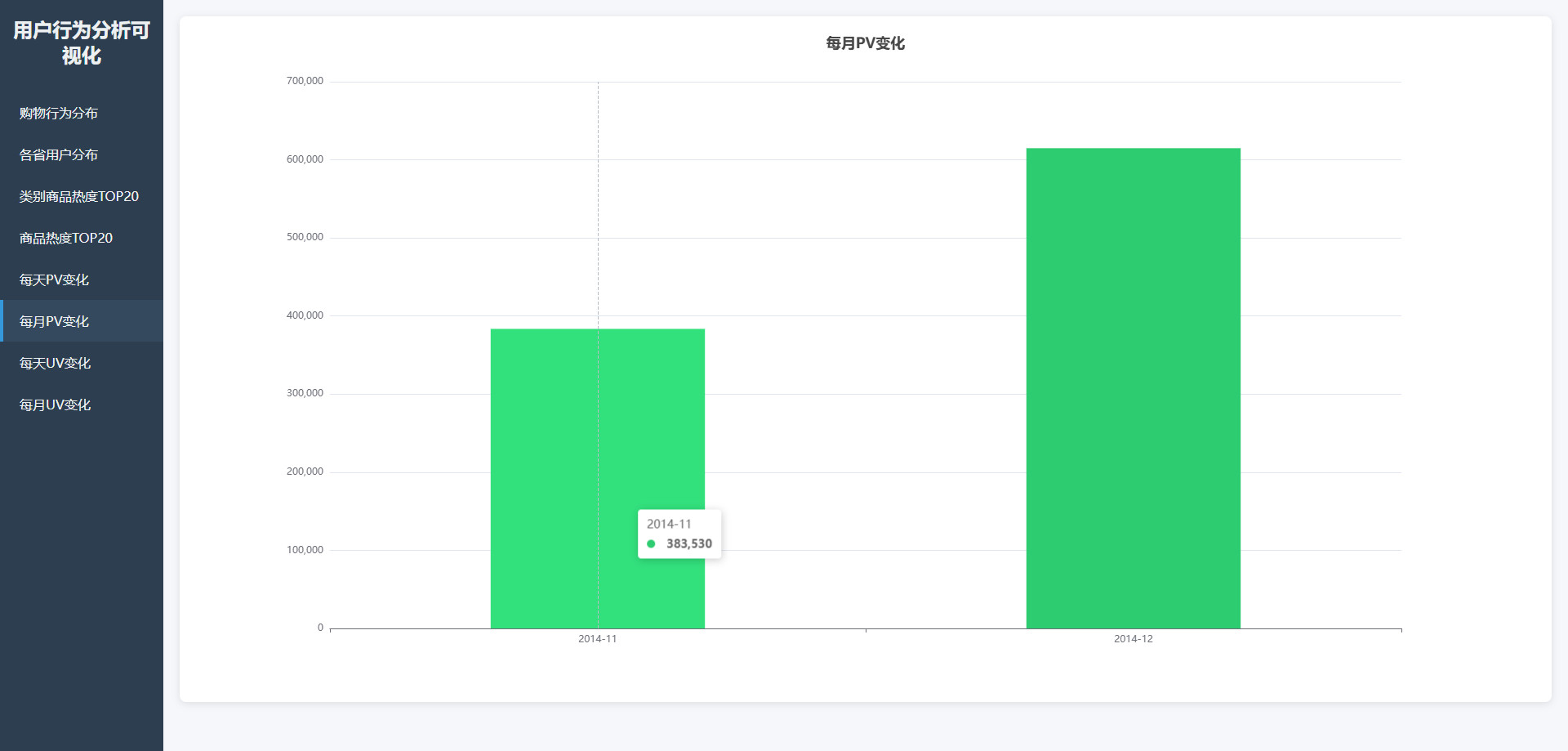

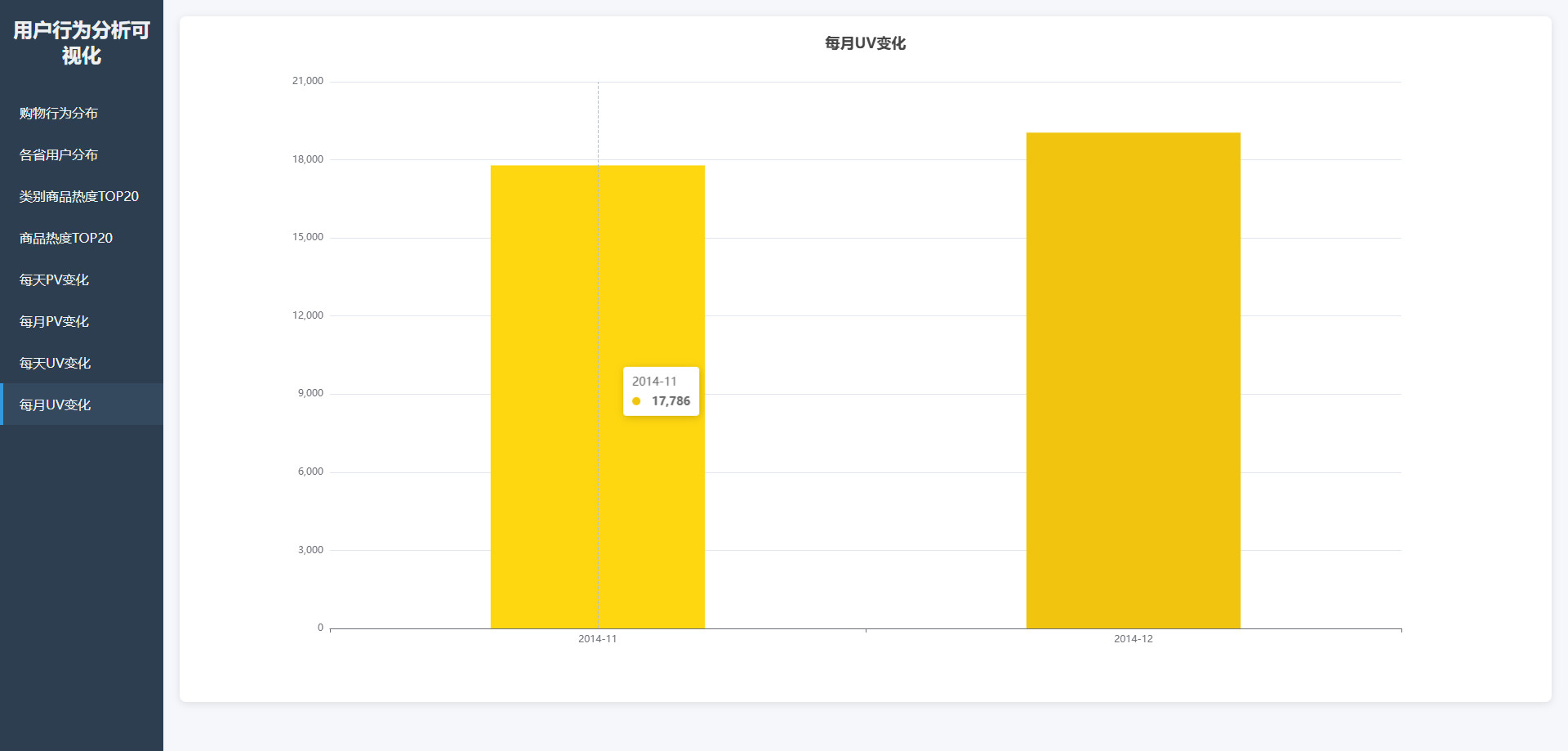

# Visualization Charts

# Procedure

## Start Hadoop

```bash
# Start Hadoop
bash /export/software/hadoop-3.2.0/sbin/start-hadoop.sh

# If the NameNode stays in safe mode after startup, leave it manually:
# hdfs dfsadmin -safemode leave
```


## Start HBase

```bash
# Start ZooKeeper
/export/software/apache-zookeeper-3.6.4-bin/bin/zkServer.sh start

# Start HBase
sh /export/software/hbase-2.2.7/bin/start-hbase.sh

# Open the HBase shell
/export/software/hbase-2.2.7/bin/hbase shell

# When finished, shut down in reverse order: stop HBase first...
sh /export/software/hbase-2.2.7/bin/stop-hbase.sh

# ...then stop ZooKeeper
/export/software/apache-zookeeper-3.6.4-bin/bin/zkServer.sh stop
```
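The block above starts ZooKeeper before HBase and stops them in the reverse order. Assuming HBase is configured to use this separately started ZooKeeper (`HBASE_MANAGES_ZK=false` in `hbase-env.sh`, which the explicit `zkServer.sh start` suggests), that ordering matters: HBase needs ZooKeeper to be up while it starts and shuts down. A minimal Python sketch that encodes the ordering, reusing the paths from the block above; `plan`, `run` and the `dry_run` flag are hypothetical helpers, not part of the project.

```python
import subprocess
from typing import List

# Paths copied from the shell block above; adjust to your installation.
ZK_SERVER = "/export/software/apache-zookeeper-3.6.4-bin/bin/zkServer.sh"
HBASE_BIN = "/export/software/hbase-2.2.7/bin"


def plan(action: str) -> List[List[str]]:
    """Return the commands for 'start' or 'stop' in dependency order."""
    start = [[ZK_SERVER, "start"], ["sh", f"{HBASE_BIN}/start-hbase.sh"]]
    stop = [["sh", f"{HBASE_BIN}/stop-hbase.sh"], [ZK_SERVER, "stop"]]  # reverse of start
    if action == "start":
        return start
    if action == "stop":
        return stop
    raise ValueError(f"unknown action: {action!r}")


def run(action: str, dry_run: bool = False) -> List[List[str]]:
    """Execute the plan; check=True aborts at the first command that fails."""
    commands = plan(action)
    if not dry_run:
        for cmd in commands:
            subprocess.run(cmd, check=True)
    return commands
```

Calling `run("start", dry_run=True)` only returns the command list, which is a cheap way to review the order before executing it for real.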


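## Upload the Dataset

```bash
# Create the directories (user/ receives the data files for Flume in the next step)
mkdir -p /data/jobs/project/user/

# Enter the project directory
cd /data/jobs/project/

# Extract user_behavior_100W.7z from the "data" directory,
# then upload user_behavior_100W.csv to /data/jobs/project/

# Show the first 5 records
head -5 user_behavior_100W.csv
```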
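Before cleaning, it helps to confirm that every row has the same number of fields. A minimal Python sketch that does what `head -5` does but splits each line into fields, plus a histogram of field counts over the whole file. It assumes the file is comma-separated and UTF-8 encoded; the column layout of `user_behavior_100W.csv` is not documented here. `head_records` and `field_counts` are hypothetical helpers.

```python
import csv
from collections import Counter
from itertools import islice
from typing import Iterable, List


def head_records(lines: Iterable[str], n: int = 5) -> List[List[str]]:
    """Like `head -n`, but each line is split into CSV fields."""
    return list(islice(csv.reader(lines), n))


def field_counts(lines: Iterable[str]) -> Counter:
    """Map 'number of fields' -> 'number of rows'; more than one key hints at malformed rows."""
    return Counter(len(row) for row in csv.reader(lines))
```

Pass an open file object (opened with `newline=""`) as `lines`. A result with a single key means every row has the same number of fields; extra keys point to rows the preprocessing step will have to handle.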


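## Start Flume

```bash
# Upload source_dir_sink_hdfs.conf to /data/jobs/project/

# Start the Flume agent (it runs in the foreground and logs to the console);
# -n a1 must match the agent name defined in the .conf file
cd /export/software/apache-flume-1.6.0-bin/
bin/flume-ng agent -c conf -f /data/jobs/project/source_dir_sink_hdfs.conf -n a1 -Dflume.root.logger=INFO,console

# In a second terminal: copy the dataset into /data/jobs/project/user/ under a timestamped name
yes | cp /data/jobs/project/user_behavior_100W.csv /data/jobs/project/user/data_$(date +%Y%m%d%H%M%S)

# Verify that Flume has written the data to HDFS
hdfs dfs -ls /source/logs/
```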
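Flume's spooling-directory source expects every file placed in its directory to be complete, immutable and uniquely named; a file that is still being written when Flume opens it, or a reused file name, makes the source log an error and stop. Assuming `source_dir_sink_hdfs.conf` uses that source on `/data/jobs/project/user/` (the conf file is not shown here), a safer handoff than copying straight into the directory is to copy into a staging directory on the same filesystem and then rename. A minimal Python sketch; `spool_file_name`, `stage_into_spool_dir` and the staging directory are hypothetical.

```python
import os
import shutil
import time
from typing import Optional


def spool_file_name(ts: Optional[time.struct_time] = None) -> str:
    """Same pattern as data_$(date +%Y%m%d%H%M%S) in the shell step."""
    return "data_" + time.strftime("%Y%m%d%H%M%S", ts if ts is not None else time.localtime())


def stage_into_spool_dir(src: str, staging_dir: str, spool_dir: str,
                         ts: Optional[time.struct_time] = None) -> str:
    """Copy src fully into staging_dir, then move it into spool_dir in one rename."""
    name = spool_file_name(ts)
    final_path = os.path.join(spool_dir, name)
    if os.path.exists(final_path):
        # The spooling source requires unique names, so never overwrite an existing file.
        raise FileExistsError(final_path)
    tmp_path = os.path.join(staging_dir, name)
    shutil.copyfile(src, tmp_path)   # the slow copy happens outside the watched directory
    os.rename(tmp_path, final_path)  # atomic only when both dirs are on the same filesystem
    return final_path
```

For example, `stage_into_spool_dir("/data/jobs/project/user_behavior_100W.csv", "/data/jobs/project/staging", "/data/jobs/project/user")`, where `/data/jobs/project/staging` is a hypothetical directory created beforehand. Like the shell version, two calls within the same second produce the same name; here the second call raises instead of overwriting.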


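## MapReduce Data Preprocessing

```bash
cd /data/jobs/project/

# Package the Maven project "mapreduce-job" in the "数据分析" (data analysis) directory
# and place the resulting mapreduce-job-jar-with-dependencies.jar in /data/jobs/project/
# Build command: mvn clean package -DskipTests

# The output directory must not exist yet; before re-running, remove it with:
# hdfs dfs -rm -r /data/output/
hadoop jar mapreduce-job-jar-with-dependencies.jar data_clean /source/logs/ /data/output/

hdfs dfs -ls /data/output/
```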
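The cleaning rules of the `data_clean` job are not documented here. As an illustration only, a Python sketch of typical cleaning logic in map/reduce form, assuming the five-column layout of the public Tianchi UserBehavior dataset (`user_id,item_id,category_id,behavior,timestamp`, with behavior one of `pv`, `buy`, `cart`, `fav`). The 100W file used here may carry extra columns (the later `province_num` output suggests a province field), so adjust the field count. `clean_record` and `clean_all` are hypothetical.

```python
from typing import Iterable, Iterator, Optional

# Behavior codes of the public Tianchi dataset (assumption for this project's file).
VALID_BEHAVIORS = {"pv", "buy", "cart", "fav"}


def clean_record(line: str) -> Optional[str]:
    """Map step: return a normalized record, or None to drop the line."""
    fields = [f.strip() for f in line.strip().split(",")]
    if len(fields) != 5:
        return None  # wrong number of columns (also catches blank lines)
    user_id, item_id, category_id, behavior, ts = fields
    if not all(x.isdigit() for x in (user_id, item_id, category_id, ts)):
        return None  # non-numeric IDs or timestamp
    behavior = behavior.lower()
    if behavior not in VALID_BEHAVIORS:
        return None
    return ",".join((user_id, item_id, category_id, behavior, ts))


def clean_all(lines: Iterable[str]) -> Iterator[str]:
    """Map + reduce: keying on the whole record lets the reducer drop exact duplicates."""
    kept = {rec for rec in map(clean_record, lines) if rec is not None}  # set stands in for shuffle + reduce
    return iter(sorted(kept))  # sorted, like keys arriving at a single reducer
```

Using the whole cleaned record as the map output key is a common way for a MapReduce job to deduplicate: identical lines meet in the same reducer call, which writes the key once.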


## MapReduce Data Analysis

```bash
cd /data/jobs/project/

# Upload the mr.sh script, then strip Windows carriage returns (\r) so bash can run it
sed -i 's/\r//g' mr.sh
sh mr.sh

# Check the output directories
hdfs dfs -ls /data/behavior/
hdfs dfs -ls /data/province_num/
hdfs dfs -ls /data/pv_category/
hdfs dfs -ls /data/pv_item/
hdfs dfs -ls /data/uv_day/
hdfs dfs -ls /data/uv_month/
hdfs dfs -ls /data/pv_day/
hdfs dfs -ls /data/pv_month/
```
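`mr.sh` presumably launches the analysis jobs whose outputs are listed above; its contents are not shown. A Python sketch that simulates map, shuffle and reduce for the `pv_day`/`uv_day` figures, under two assumptions: PV counts `pv` events and UV counts distinct users per period, and timestamps are Unix seconds interpreted in China Standard Time (UTC+8). The input is assumed to be cleaned five-field records; the function names are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone
from typing import Dict, Iterable, Iterator, List, Tuple

CST = timezone(timedelta(hours=8))  # assumption: timestamps are read as UTC+8


def map_phase(records: Iterable[str], fmt: str = "%Y-%m-%d") -> Iterator[Tuple[str, str]]:
    """Emit (period, user_id) for every 'pv' event; fmt '%Y-%m' gives monthly keys."""
    for rec in records:
        user_id, _item_id, _category_id, behavior, ts = rec.strip().split(",")
        if behavior == "pv":
            period = datetime.fromtimestamp(int(ts), tz=CST).strftime(fmt)
            yield period, user_id


def shuffle(pairs: Iterable[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Group values by key, as the framework does between map and reduce."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: Dict[str, List[str]]) -> Dict[str, Tuple[int, int]]:
    """period -> (pv, uv): pv counts events, uv counts distinct users."""
    return {period: (len(users), len(set(users)))
            for period, users in sorted(groups.items())}
```

Passing `fmt="%Y-%m"` to `map_phase` yields the monthly counterparts (`pv_month`, `uv_month`) from the same reducer.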


## Import the Data into HBase

```bash
cd /data/jobs/project/

# Package the Maven project "write-to-hbase" in the "write-to-hbase" directory
# Build command: mvn clean package -DskipTests

java -cp write-to-hbase-jar-with-dependencies.jar org.example.write.hbase.HdfsToHbaseBatchImporter

# Verify in the HBase shell (/export/software/hbase-2.2.7/bin/hbase shell):
# scan 'behavior',{FORMATTER => 'toString'}
# scan 'province_num',{FORMATTER => 'toString'}
# scan 'pv_category',{FORMATTER => 'toString'}
# scan 'pv_item',{FORMATTER => 'toString'}
# scan 'uv_day',{FORMATTER => 'toString'}
# scan 'uv_month',{FORMATTER => 'toString'}
# scan 'pv_day',{FORMATTER => 'toString'}
# scan 'pv_month',{FORMATTER => 'toString'}
```
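Judging by its name, `HdfsToHbaseBatchImporter` reads the MapReduce results from HDFS and writes them into the HBase tables above in batches; its source is not shown. A Python sketch of the two core steps, assuming the results use the default `TextOutputFormat` layout (one `key<TAB>value` line per record, in `part-r-*` files): turning lines into cells, and grouping cells into fixed-size batches. The column family `info` and qualifier `count` are hypothetical; in the Java HBase client, each batch would correspond to a `List<Put>` passed to `Table.put`.

```python
from itertools import islice
from typing import Iterable, Iterator, List, Tuple

Cell = Tuple[str, str, str]  # (row_key, "family:qualifier", value)


def to_cells(lines: Iterable[str], family: str = "info",
             qualifier: str = "count") -> Iterator[Cell]:
    """Turn 'key<TAB>value' reducer output lines into HBase-style cells."""
    column = f"{family}:{qualifier}"
    for line in lines:
        key, sep, value = line.rstrip("\n").partition("\t")
        if not sep or not key:
            continue  # skip blank or malformed lines instead of writing empty row keys
        yield key, column, value


def batched(cells: Iterable[Cell], size: int) -> Iterator[List[Cell]]:
    """Yield lists of at most `size` cells; each list maps to one batched write."""
    if size < 1:
        raise ValueError("size must be >= 1")
    it = iter(cells)
    while batch := list(islice(it, size)):  # walrus operator needs Python 3.8+
        yield batch
```

Batching trades memory for fewer round trips to the RegionServers; a few hundred to a few thousand puts per batch is a common starting point, to be tuned against the cluster.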


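## Start the Visualization

```bash
cd /data/jobs/project/

# Package the Maven project "springboot-demo" in the "可视化" (visualization) directory
# Build command: mvn clean package -DskipTests

# java -jar resolves the entry point (com.example.hbasedemo.HBaseDemoApplication)
# from the jar manifest, so no class-name argument is needed
java -jar /data/jobs/project/springboot-demo-1.0-SNAPSHOT.jar
```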
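The Spring Boot application presumably reads the HBase tables above and renders the charts; the data format its front end expects is not documented here. A Python sketch of a typical reshaping step: turning `(row_key, value)` pairs into parallel category and value lists of the kind chart libraries consume, ordered by row key for time series such as `pv_day`, or ranked with a top-N cut for tables such as `pv_item`. `to_chart_payload` and the `categories`/`values` keys are hypothetical.

```python
from typing import Dict, Iterable, Optional, Tuple


def to_chart_payload(rows: Iterable[Tuple[str, str]],
                     top_n: Optional[int] = None) -> Dict[str, list]:
    """Turn (row_key, value) pairs into {'categories': [...], 'values': [...]}."""
    parsed = [(key, int(value)) for key, value in rows]  # counts are stored as text
    if top_n is None:
        parsed.sort(key=lambda kv: kv[0])  # time series: order by row key (e.g. date)
    else:
        parsed.sort(key=lambda kv: kv[1], reverse=True)  # rankings: largest first
        parsed = parsed[:top_n]
    return {"categories": [k for k, _ in parsed], "values": [v for _, v in parsed]}
```

Keeping the ordering decision in one place means the chart code only has to plot two parallel lists.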
