A Spark-Based Distributed Real-Time Log Analysis and Intrusion Detection System Project

舟率率 5/9/2025 python sbt kafka redis flume flask java

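# Project overview

# Data type

Simulated data

# Development environment

CentOS 7

# Software versions

Python 3.8.18, Hadoop 3.2.0, Spark 3.1.2, sbt 1.9.9, Scala 2.12.18, Flume 1.6.0, Kafka 2.8.2, Redis 6.2.9

# Development languages

Python, Scala, Java

# Development workflow

Data upload (HDFS) -> model training and prediction (Spark) -> real-time data analysis (Spark) -> data storage (Kafka and Redis) -> backend (Flask) -> frontend (HTML + JS + CSS)

# Steps

# Python packages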

pip3 install redis==3.5.0 -i https://pypi.tuna.tsinghua.edu.cn/simple/

pip3 install Flask_SocketIO==5.5.1 -i https://pypi.tuna.tsinghua.edu.cn/simple/

pip3 install requests==2.31.0 -i https://pypi.tuna.tsinghua.edu.cn/simple/

pip3 install "urllib3<2.0.0" --upgrade -i https://pypi.tuna.tsinghua.edu.cn/simple/
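After running the pip commands above, it can help to confirm that the pinned versions are the ones Python actually sees. The following is a minimal sketch using only the standard library (`importlib.metadata`, available from Python 3.8). The `EXPECTED` table and the `check_pins` helper are illustrative names, not part of the project; the `urllib3<2.0.0` constraint is a range rather than an exact pin, so it is left out here.

```python
from importlib import metadata  # in the standard library since Python 3.8

# Exact pins copied from the pip commands above.
EXPECTED = {
    "redis": "3.5.0",
    "Flask-SocketIO": "5.5.1",
    "requests": "2.31.0",
}


def check_pins(expected, get_version=metadata.version):
    """Return {package: (wanted, found)} for every pin that is not satisfied.

    `found` is None when the package is not installed at all.
    """
    problems = {}
    for name, wanted in expected.items():
        try:
            found = get_version(name)
        except metadata.PackageNotFoundError:
            found = None
        if found != wanted:
            problems[name] = (wanted, found)
    return problems


if __name__ == "__main__":
    for name, (wanted, found) in check_pins(EXPECTED).items():
        print(f"{name}: expected {wanted}, found {found}")
```

An empty result means every exact pin matches; nothing is printed in that case.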

# Packaging the Spark job

mkdir -p /data/jobs/project/

cd /data/jobs/project/

# Upload the spark folder to the /data/jobs/project/ directory

cd /data/jobs/project/spark/

# Build with sbt

sbt clean assembly

# Once the build finishes, run the following

# Copy "logv_compute-assembly-0.1.jar" from "spark/target/scala-2.12/" into "/data/jobs/project/" as "logvision.jar"

yes | cp /data/jobs/project/spark/target/scala-2.12/logv_compute-assembly-0.1.jar /data/jobs/project/logvision.jar

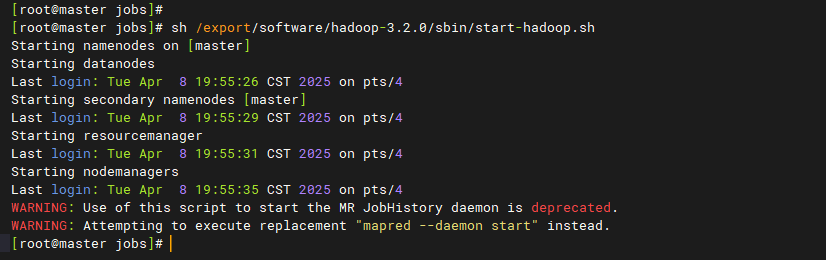

# Start Hadoop

# To leave safe mode: hdfs dfsadmin -safemode leave

# Start Hadoop

bash /export/software/hadoop-3.2.0/sbin/start-hadoop.sh

# Start Kafka

# Start ZooKeeper

sh /export/software/kafka_2.12-2.8.2/bin/zookeeper-server-start.sh -daemon /export/software/kafka_2.12-2.8.2/config/zookeeper.properties

# Start the Kafka broker

sh /export/software/kafka_2.12-2.8.2/bin/kafka-server-start.sh -daemon /export/software/kafka_2.12-2.8.2/config/server.properties

# Create the topic

/export/software/kafka_2.12-2.8.2/bin/kafka-topics.sh --create --topic raw_log --replication-factor 1 --partitions 1 --zookeeper master:2181

# Start a console consumer

/export/software/kafka_2.12-2.8.2/bin/kafka-console-consumer.sh --bootstrap-server master:9092 --topic raw_log

# Stop Kafka

# sh /export/software/kafka_2.12-2.8.2/bin/kafka-server-stop.sh

# Stop ZooKeeper

# sh /export/software/kafka_2.12-2.8.2/bin/zookeeper-server-stop.sh

# Start Redis

redis-server /export/software/redis-6.2.9/redis.conf
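Before moving on, it may be worth checking that ZooKeeper, Kafka, and Redis are actually accepting connections. Below is a minimal sketch that only tests whether a TCP port is open; it does not speak any of the three protocols. The host `master` and ports 2181 and 9092 come from the Kafka commands above, while the Redis address (`127.0.0.1:6379`, the Redis default) is an assumption that depends on `redis.conf`. The names `SERVICES`, `is_listening`, and `check_all` are illustrative.

```python
import socket

# "master" with 2181/9092 is taken from the Kafka commands above.
# The Redis entry is an assumption: 6379 is the default port and may
# differ from what redis.conf actually configures.
SERVICES = {
    "zookeeper": ("master", 2181),
    "kafka": ("master", 9092),
    "redis": ("127.0.0.1", 6379),
}


def is_listening(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolved host names.
        return False


def check_all(services, timeout=2.0):
    """Return {service_name: bool} for every entry in `services`."""
    return {
        name: is_listening(host, port, timeout)
        for name, (host, port) in services.items()
    }
```

Calling `check_all(SERVICES)` returns a dictionary such as `{"zookeeper": True, ...}`; any `False` entry points at a service that should be restarted before continuing.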

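# Prepare the directories

mkdir -p /home/logv/

cd /home/logv/

touch /home/logSrc

# Extract the "access_log.7z" archive from the "datasets" directory into the current directory

# Upload the "learning-datasets" folder and the "access_log" file from the "datasets" directory to "/home/logv/"

# Upload to HDFS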

hdfs dfs -mkdir -p /home/logv/learning-datasets/training/

hdfs dfs -rm -r /home/logv/learning-datasets/training/*

hdfs dfs -put /home/logv/learning-datasets/training/good.txt /home/logv/learning-datasets/training/

hdfs dfs -put /home/logv/learning-datasets/training/bad.txt /home/logv/learning-datasets/training/

hdfs dfs -mkdir -p /home/logv/learning-datasets/testing/

hdfs dfs -rm -r /home/logv/learning-datasets/testing/*

hdfs dfs -put /home/logv/learning-datasets/testing/good.txt /home/logv/learning-datasets/testing/

hdfs dfs -put /home/logv/learning-datasets/testing/bad.txt /home/logv/learning-datasets/testing/
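The eight `hdfs dfs` commands above follow one pattern for each of the two splits, so they can be generated rather than typed. The sketch below builds the same sequence as argument lists. The helper name `upload_commands` is illustrative, and the added `-f` flag on `-rm` (which suppresses the error when nothing matches) is a small deviation from the commands above.

```python
import shlex

LOCAL_ROOT = "/home/logv/learning-datasets"  # local directory used above
HDFS_ROOT = "/home/logv/learning-datasets"   # same path on HDFS, as in the commands above


def upload_commands(splits=("training", "testing"), files=("good.txt", "bad.txt")):
    """Build the `hdfs dfs` argument lists for every split, in execution order."""
    cmds = []
    for split in splits:
        target = f"{HDFS_ROOT}/{split}/"
        cmds.append(["hdfs", "dfs", "-mkdir", "-p", target])
        # -f keeps -rm from failing when the directory is still empty
        cmds.append(["hdfs", "dfs", "-rm", "-r", "-f", f"{target}*"])
        for name in files:
            cmds.append(["hdfs", "dfs", "-put", f"{LOCAL_ROOT}/{split}/{name}", target])
    return cmds


if __name__ == "__main__":
    # Dry run: print the commands instead of executing them.
    for cmd in upload_commands():
        print(shlex.join(cmd))
```

To execute instead of printing, each list can be passed to `subprocess.run(cmd, check=True)`. Because no shell is involved in that case, the trailing `*` reaches `hdfs` unexpanded and is matched against HDFS paths rather than against the identically named local directory.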

# Start Flume

# Upload the entire "flume" folder to the "/data/jobs/project/" directory

/export/software/apache-flume-1.6.0-bin/bin/flume-ng agent -n a2 -c conf -f /data/jobs/project/flume/standalone.conf -Dflume.root.logger=INFO,console

# Train and test the intrusion detection model

cd /data/jobs/project/

spark-submit --master local[*] --class learning /data/jobs/project/logvision.jar

hdfs dfs -ls /home/logv/IDModel/

hdfs dfs -ls /home/logv/learning-datasets/training/

hdfs dfs -ls /home/logv/learning-datasets/testing/

# Start the real-time log generator

cd /data/jobs/project/

# Upload the "log_gen" folder to the "/data/jobs/project/" directory

cd /data/jobs/project/log_gen/

javac log_gen.java

java log_gen /home/logv/access_log /home/logSrc 5 2
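The generator above takes a source log, a target file, and two numbers whose meaning is not spelled out here. As a rough illustration of what such a replayer might do, the Python sketch below appends lines from the source to the target in batches with a pause between them. Treating `5` as a batch size and `2` as an interval in seconds is a guess, not a description of `log_gen.java`, and the function name `replay` is hypothetical.

```python
import itertools
import time


def replay(src_path, dst_path, batch_size, interval_s, max_batches=None, sleep=time.sleep):
    """Append lines from src_path to dst_path, batch_size lines at a time.

    Pauses interval_s seconds after each batch and returns the number of
    lines written. max_batches limits the run (None replays the whole file).
    """
    written = 0
    with open(src_path, encoding="utf-8", errors="replace") as src, \
         open(dst_path, "a", encoding="utf-8") as dst:
        for batch_no in itertools.count():
            if max_batches is not None and batch_no >= max_batches:
                break
            batch = list(itertools.islice(src, batch_size))
            if not batch:
                break  # source exhausted
            dst.writelines(batch)
            dst.flush()  # make the lines visible to a tailing reader right away
            written += len(batch)
            sleep(interval_s)
    return written
```

Under those assumptions, `replay("/home/logv/access_log", "/home/logSrc", batch_size=5, interval_s=2)` would mimic the command above. The explicit `flush()` matters when another process is following the target file, since buffered lines would otherwise arrive in bursts.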

# Start the real-time analysis job

cd /data/jobs/project/

spark-submit --master local[*] --class streaming /data/jobs/project/logvision.jar

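# Start Flask

You need to apply for your own Baidu Maps AK (access key).

The project calls the Baidu API, and a key that is called too frequently can easily be disabled.

URL: https://lbsyun.baidu.com/faq/api?title=webapi/ip-api-base

cd /data/jobs/project/

# Upload the "flask" folder to the "/data/jobs/project/" directory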

cd /data/jobs/project/flask/

python3 app.py
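Since frequent calls to the Baidu API can get the key disabled, one common mitigation is to cache lookups so that each IP address triggers at most one real request while it stays in the cache. The sketch below shows the idea with `functools.lru_cache`. Whether `app.py` already does something similar is not shown here; `cached_lookup` and `fake_lookup` are hypothetical names, and the real HTTP request is deliberately replaced by a stand-in.

```python
import functools


def cached_lookup(lookup, maxsize=4096):
    """Wrap `lookup(ip) -> result` so repeated IPs are served from an LRU cache."""
    @functools.lru_cache(maxsize=maxsize)
    def wrapper(ip):
        return lookup(ip)
    return wrapper


if __name__ == "__main__":
    calls = []

    def fake_lookup(ip):
        # Stand-in for the real HTTP request to the geolocation service.
        calls.append(ip)
        return {"ip": ip, "city": "unknown"}

    locate = cached_lookup(fake_lookup)
    for ip in ["1.2.3.4", "1.2.3.4", "5.6.7.8", "1.2.3.4"]:
        locate(ip)
    print(len(calls))  # 2 real calls for 4 requests
```

`lru_cache` does not store exceptions, so a failed request is retried on the next call rather than cached. It does return the same object on every hit, so callers should treat a cached dictionary as read-only.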
